Importing the dataset

1. I can’t import the dataset. It says that the file is not found. What should I do?

Sol. Make sure that, in File Explorer, you are in the folder that contains the file 'Data.csv' you want to import. This folder is called the working directory.
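If you prefer to check this from Python itself, the following minimal sketch prints the working directory and tests whether the file is visible from it. The helper name `find_dataset` is hypothetical and only used for illustration; the file name `Data.csv` is the one mentioned above.

```python
import os

def find_dataset(filename="Data.csv"):
    """Return the full path of `filename` if it sits in the working directory, else None."""
    path = os.path.join(os.getcwd(), filename)
    return path if os.path.isfile(path) else None

print("Working directory:", os.getcwd())
print("Files here:", os.listdir(os.getcwd()))

location = find_dataset("Data.csv")
if location is None:
    # Either move the file into this folder or switch folders with os.chdir(...)
    print("Data.csv is not in the working directory.")
else:
    print("Found:", location)
```

If the file is reported as missing, either move it into the printed folder or change the working directory to the folder that holds it.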

2. What is the difference between the independent variables and the dependent variable?

Sol. The independent variables are the input data that you have, with which you want to predict something. That something is the dependent variable.

3. In Python, why do we create X and y separately?

Sol. Because we want to work with NumPy arrays instead of pandas data frames. NumPy arrays are the most convenient format to work with when doing data preprocessing and building Machine Learning models. So we create two separate arrays: one that contains our independent variables (also called the input features), and another one that contains our dependent variable (what we want to predict).

4. In Python, what exactly does 'iloc' do?

Sol. It selects rows and columns by their integer position (their index). In other words, using 'iloc' allows us to take columns just by giving their index.

5. In Python, what exactly does '.values' do?

Sol. It returns the values of the columns you are taking (by their index) inside a NumPy array. That is basically how X and y become NumPy arrays.
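Questions 3 to 5 can be illustrated together. This is a minimal sketch, assuming a small numeric stand-in for the dataset (the column names and values are invented for the example) whose last column is the dependent variable.

```python
import io
import pandas as pd

# Hypothetical stand-in for the real file: two feature columns, one target column.
csv_text = """Age,Salary,Purchased
44,72000,0
27,48000,1
30,54000,0
"""
dataset = pd.read_csv(io.StringIO(csv_text))

# iloc selects by integer position: [rows, columns].
X = dataset.iloc[:, :-1].values  # all rows, every column except the last
y = dataset.iloc[:, -1].values   # all rows, last column only

print(type(X).__name__, X.shape)
print(type(y).__name__, y.shape)
```

Here `X` holds the independent variables as a 2D array and `y` holds the dependent variable as a 1D array.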

Missing Data

6. In Python, what is the difference between fit and transform?

Sol. The fit part extracts some information from the data on which the object is applied (here, Imputer spots the missing values and computes the mean of each column). The transform part then applies a transformation using that information (here, Imputer replaces each missing value with the mean of its column). Note that in recent versions of scikit-learn the Imputer class has been replaced by SimpleImputer.
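The two steps can be seen separately in the sketch below. It uses `SimpleImputer` from `sklearn.impute`, the current replacement for the older `Imputer` class; the small array is invented for the example.

```python
import numpy as np
from sklearn.impute import SimpleImputer

X = np.array([[25.0, 50000.0],
              [np.nan, 60000.0],
              [35.0, np.nan]])

imputer = SimpleImputer(missing_values=np.nan, strategy="mean")

# fit: learn the mean of each column, ignoring the missing entries
imputer.fit(X)
print(imputer.statistics_)   # the learned column means

# transform: replace each missing entry with the mean learned for its column
X_filled = imputer.transform(X)
print(X_filled)
```

Calling `fit_transform(X)` performs both steps in one call on the same data.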

7. Is replacing by the mean the best strategy to handle missing values?

Sol. It is a good strategy, but not always the best one. It depends on your business problem, on the way your data is distributed, and on the number of missing values. If, for example, you have a lot of missing values, then mean substitution is not the best choice. Other strategies include "median" imputation, "most frequent" imputation, and prediction imputation.

Prediction imputation is another recommended strategy, which I didn’t cover in Part 1 because it requires understanding Part 3 - Classification. If you have completed Part 3, here is the strategy, which can work even better than mean imputation: take the feature column that contains the missing values and set it as the dependent variable, while setting the other columns as the independent variables. Then split your dataset into a training set and a test set, where the training set contains all the observations (the rows) in which that feature column has no missing value, and the test set contains all the observations in which it is missing. Next, train a classification model (a good one for this situation is k-NN) on the training set and use it to predict the missing values in the test set. Finally, replace your missing values with the predictions. A great strategy!
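The prediction imputation steps can be sketched as follows. This is an illustration under stated assumptions, not a definitive implementation: the toy data frame and its column names are invented, the feature with missing values is categorical (so a k-NN classifier fits), and for a numerical feature a regression model would be the analogous choice.

```python
import pandas as pd
from sklearn.neighbors import KNeighborsClassifier

# Hypothetical toy data: 'Segment' is the feature column with missing values.
df = pd.DataFrame({
    "Age":     [22, 25, 24, 47, 52, 50, 23, 49],
    "Salary":  [30, 32, 31, 80, 85, 82, 29, 81],
    "Segment": ["A", "A", "A", "B", "B", "B", None, None],
})

features = ["Age", "Salary"]
known = df[df["Segment"].notna()]    # "training set": rows where the feature is present
missing = df[df["Segment"].isna()]   # "test set": rows where the feature is missing

# Treat the incomplete feature as the dependent variable and learn it from the others.
knn = KNeighborsClassifier(n_neighbors=3)
knn.fit(known[features].values, known["Segment"].tolist())

# Replace the missing values with the model's predictions.
df.loc[missing.index, "Segment"] = knn.predict(missing[features].values)
print(df)
```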

Categorical Data

8. In Python, what do the two 'fit_transform' methods do?

Sol. When the 'fit_transform()' method is called from the LabelEncoder() class, it transforms the category strings into integers. For example, it transforms France, Germany, and Spain into 0, 1, and 2 (the integers are assigned in alphabetical order of the labels). Then, when the 'fit_transform()' method is called from the OneHotEncoder() class, it creates a separate column for each distinct label, with binary values 0 and 1. Those separate columns are the dummy variables.
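A minimal sketch of both encoders on an invented list of countries is shown below. In recent scikit-learn versions `OneHotEncoder` accepts string categories directly, so the sketch passes the strings to it without going through `LabelEncoder` first.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder, OneHotEncoder

countries = np.array(["France", "Spain", "Germany", "Spain"])

# LabelEncoder: one integer per category, assigned in sorted order.
label_encoder = LabelEncoder()
codes = label_encoder.fit_transform(countries)
print(label_encoder.classes_)  # ['France' 'Germany' 'Spain']
print(codes)                   # [0 2 1 2]

# OneHotEncoder: one binary column per category (expects a 2D input).
onehot_encoder = OneHotEncoder()
dummies = onehot_encoder.fit_transform(countries.reshape(-1, 1)).toarray()
print(dummies)                 # columns are ordered France, Germany, Spain
```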

Splitting the dataset into the Training set and Test set

9. What is the difference between the training set and the test set?

Sol. The training set is a subset of your data on which your model learns how to predict the dependent variable from the independent variables. The test set is the complementary subset, on which you evaluate your model to see whether it correctly predicts the dependent variable from the independent variables.
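A minimal sketch of such a split with scikit-learn's `train_test_split` is shown below. The arrays are invented for the example, and the 80/20 ratio and `random_state` are arbitrary choices. Passing `stratify=y` is optional; it keeps the proportions of the dependent variable's values similar in both subsets.

```python
import numpy as np
from sklearn.model_selection import train_test_split

X = np.arange(20).reshape(10, 2)   # 10 observations, 2 features
y = np.array([0, 1] * 5)           # dependent variable: 5 observations of each class

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y
)

print(X_train.shape, X_test.shape)  # 8 training rows, 2 test rows
print(y_test)                       # one observation of each class
```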

10. Why do we split on the dependent variable?

Sol. Because we want to have well-distributed values of the dependent variable in both the training set and the test set. For example, if the training set contained only one value of the dependent variable, our model wouldn’t be able to learn any correlation between the independent and dependent variables.

Feature Scaling

11. Do we really have to apply Feature Scaling on the dummy variables?

Sol. Yes, if you want to optimize the accuracy of your model's predictions. No, if you want to keep as much interpretability as possible in your model.

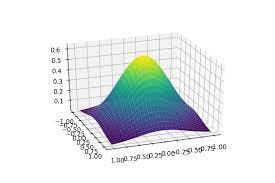

12. When should we use Standardization and Normalization?

Sol. Generally, you should normalize (normalization, which rescales each feature to a fixed range such as [0, 1]) when the data is normally distributed, and scale (standardization, which rescales each feature to zero mean and unit variance) when the data is not normally distributed. When in doubt, go for standardization. In practice, however, both scaling methods are commonly tested.
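To make the two methods concrete, here is a minimal sketch on an invented single-feature array, using scikit-learn's `StandardScaler` for standardization and `MinMaxScaler` for normalization.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])

# Standardization: (x - mean) / standard deviation, per column
X_std = StandardScaler().fit_transform(X)

# Normalization: (x - min) / (max - min), per column, giving values in [0, 1]
X_norm = MinMaxScaler().fit_transform(X)

print(X_std.ravel())
print(X_norm.ravel())
```

Trying both on your own data and comparing model performance is a reasonable way to choose between them.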

reference: Machine Learning A-Z